Where are the future technologies that drive cameras forward?

The consumer electronics world is filled with dead-end technologies and things that didn't quite pan out the way people thought they would. One fairly recent casualty, for instance, is 3D TVs. Yes, I know someone out there will say they love their 3D TV, but overall, adding 3D to TV sets didn't produce even the teeniest blip of new TV purchasing. At a recent Consumer Electronics Show (CES), 3D was generally only mentioned as a footnote on new TVs. Ultra HD was the new wanna-be buzz of the rapidly declining TV industry.

In retrospect, the reason 3D TV failed is easy enough to identify (some of us were on record prior to the introduction of the first consumer 3D TVs that this wouldn't be a winner, though ;~). In a world where most people had already bought a large screen HD TV, was the addition of 3D enough to compel them to replace it with a 3D HD TV? The answer was no, and that was partly due to the lack of 3D content offerings, but more importantly due to a misunderstanding of how people actually use their TVs (they don't want to be bound to the set wearing 3D glasses; believe it or not, most television "viewing" is actually listening--people tend to be multitasking while watching TV; this was one of the reasons why I originally felt that 3D wouldn't be the thing that saved the TV makers).

Okay, but what about cameras?

I use the TV example for a reason: the engineering and decision making that go into cameras are being done by similar groups in Japan, using logic similar to what has been used in other consumer electronics products coming out of Japan. That track record isn't exactly great, as there's an overemphasis on unique technologies and less interest in what's actually useful to consumers. I tend to put it this way: "Japan wants to sell boxes based upon a technology claim; customers want to buy things that solve their problems."

Future technologies are actually relatively easy to see ahead of time. Because they go from ideas to research projects to patents to development long before they get into products, anyone who is well connected to the research community can generally see the technology options well ahead of any product announcement. The trick is to understand what the technology enables and whether that actually solves a tangible user problem.

I spent most of my career in Silicon Valley doing just that: examining what was in the R&D hoppers and coming up with products that would make a real difference to users. I won't go into the details of that, but suffice it to say that I was involved early with many products using new technologies, some of which are still not quite out today. Digital cameras for computers (now embedded in most laptops and displays) were one of the areas where I was at the forefront.

So the question is this: what technologies are in the hopper for cameras?

Light Field

One is light field photography, basically what the Lytro camera did (before it failed in the market). You put an array of many small lenses just in front of the sensor and compute how the light from those lenses got to the sensor, which gives you the ability to do things like refocus the image after the fact. Think bug eyes. Toshiba recently announced a small sensor with light field capabilities, so clearly we're now past the idea stage, past the research project stage, and deep in the patent and development stage.

So what's the user problem that light field solves? While Lytro and others have pointed to a few other benefits, the basic gain with light field cameras for most people is focus, as you can "fix" focus after the fact. Here's the problem: to get that focus benefit we have to give up pixels. Enough pixels that we end up back where we were in the late 1990s with digital imaging capability.

Does the user benefit of light field focus outweigh the decrease in pixels? Not currently, and maybe not ever. Because focus itself is not a fixed technology and is moving forward too. The primary thing the user wants light field for is that their current camera doesn't get the focus "right," not because they want to play with focus after the fact in a more complicated workflow. Thus, if current cameras can just be made to focus better (and faster), the extra pixels are a huge benefit over the light field sensors we're just now putting into production.
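To put the pixel trade-off in rough numbers, here is a minimal sketch. It assumes a simplified model in which each microlens covers an n x n patch of photosites and the output image gets about one pixel per microlens; real designs and their reconstruction software differ. The function name and the sensor figures are hypothetical, chosen only for illustration.

```python
def light_field_output_megapixels(sensor_megapixels: float,
                                  angular_samples_per_axis: int) -> float:
    """Estimate the image resolution left after a microlens array splits the sensor.

    Assumption: each microlens sits over an n x n patch of photosites, and
    those photosites record the direction of the light rather than extra
    spatial detail, so the image ends up with about one pixel per microlens.
    """
    if angular_samples_per_axis < 1:
        raise ValueError("angular_samples_per_axis must be at least 1")
    return sensor_megapixels / (angular_samples_per_axis ** 2)


if __name__ == "__main__":
    # Hypothetical sensors and microlens layouts, for illustration only.
    for sensor_mp, n in [(24.0, 4), (24.0, 8), (45.0, 6)]:
        out_mp = light_field_output_megapixels(sensor_mp, n)
        print(f"{sensor_mp:.0f}mp sensor, {n}x{n} directions per microlens "
              f"-> about {out_mp:.2f}mp of image")
```

Under this model the cost grows with the square of the directional sampling, which is why even a high-megapixel sensor drops to a low-megapixel image quickly.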

Note that most of the significant cameras announced in the last year featured phase detect and faster autofocus algorithms, some with AI. The technology of phase detect has moved from a separate system to being integrated on the image sensor, saving parts and cost. Everyone gets a benefit: manufacturers get cheaper-to-produce cameras, users get faster focus. Oftentimes older technologies do just this: migrate towards cost reduction. And that migration often holds off newer technologies that require more costs for little additional benefit.

The recent improvements in SoCs (System on Chip, such as Canon DIGIC, Nikon EXPEED, and Sony BIONZ) also play a part in improving focus. Sony itself seems to be trying to move focus decision making to the image sensor, through stacked AI capabilities.

Light field has other benefits besides focus, but in almost all of them the same question occurs: do the benefits of the new technology go far enough beyond what the old technologies can do to grab the same market? I'm willing to listen to the light field advocates' arguments on this, but my current position is "no, light field is not poised to change the camera market."

One reason for that is that you have to look at one technology against all the other available technologies and try to figure out whether one generates more downstream value than the other. It's not a frozen field: all the technologies are moving forward simultaneously, at different rates, with different gains, and the real trick is trying to figure out whether you can generate something that the user clearly sees as beneficial.

Full Color Sensors

Let's look at another leading edge technology: three-layer sensors. Foveon was very early to this, and attracted a lot of attention from the camera makers. Foveon failed as a company not because the technology wasn't good enough, but because of the company's method of "negotiating" with camera companies: they didn't get very far with their aggressive-American-with-patents-you-need approach. Coupled with Foveon's need to pay back venture capital, that put them very quickly in what we call Dead Man Walking status (enough money already in the bank or coming in via operations to stay in business, but no upside to pay back investors, loans, or grow).

Back when Foveon first appeared, I wrote that the company would fail, which produced the usual fan boy and hater negative posts coming my direction. But I didn't write that the technology would fail. My whole point was that you can't be an aggressive Gaijin who overprices oneself to the Japanese. The Japanese don't like "outside" technologies that then indebt them to large, continued royalty streams. They absolutely don't like arrogant sales presentations that tell them that there are no other options and the way they're doing it is a dead end.

But three-layer photosites are a technology that I think that everyone involved in the camera business sees as potentially providing a real, visible, tangible user benefit in the future (assuming that other problems with it are mitigated). That's why Canon, Fujifilm, Nikon, and Sony have all been working on it (ideas to research projects to patents so far) ever since Foveon came knocking.

What's the three-layer benefit? Losing the AA filter and Bayer demosaic gains a noticeable acuity benefit, all else equal. Acuity is something users see and want more of. Thus, it's clear to me that we will have three-layer sensors in our cameras of the future. At least if the technical obstacles can be overcome. But here's the thing: my position is that technical obstacles are always overcome. Maybe not as fast or as easily or as cheaply as we'd like, but man is a problem-solving machine, and three layer sensors are a problem to solve.

The challenge for Sigma—the current owner of Foveon—is whether they can move fast or well enough to keep the bigger companies from eventually just having their own, better, three-layer solutions. (Hard hat back on) My prediction is that Sigma isn't capable of staying in front. Any advantage they have today at the sensor will be lost in the not too distant future, which leaves them competing with cameras that still have catching up to do in other technologies. (Yes, here come the Sigma fan boy messages and posts; heck, some are even from the same ones that said I was wrong about Foveon's future back when I predicted they wouldn't make it as an independent company ;~).

This is another aspect that you have to look at in evaluating technologies of the future: viability. Can the first mover stay the best mover? In the case of Foveon and Sigma and three-layer sensors, the answer is likely no. In the case of Lytro and Toshiba with light field, the answer is also likely no.

Yeah, this future stuff can get tricky.

Okay, so What Does Happen?

So what else is on tap?

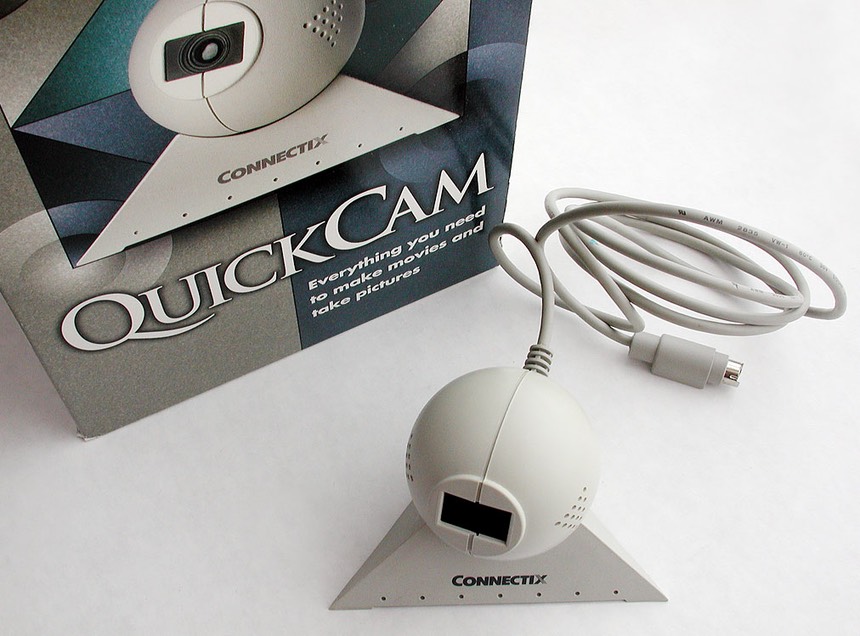

In 2002 I gave a speech about computational photography that was based upon things we had done with the QuickCam in the mid-to-late 90's. After all, my marketing definition of a QuickCam was "the fewest number of parts necessary to get image sensor data into the computer's CPU." Everything about QuickCam was computational, as the camera itself was a dumb device that did nothing but capture data and send it to the computer.

We actually have bits and pieces of computational photography scattered all over the place at the moment, but not quite the combined approach I was suggesting at the time. Panorama stitching and HDR are two pieces of the computational photography grand scene, for example. In panorama photography we use multiple pass techniques to generate data for a scene bigger than our camera can capture in one pass; in HDR we use multiple pass techniques to generate data for pixels that goes beyond what our sensors can capture at one time.

Thus, "multiple pass" is one of the key aspects of future computational photography. In what ways can we use multiple samples to extend or fix issues with our single capture? We're fairly far down that path at the moment, but there is still much more that can be done. (As an aside, the one place where light field has a chance is in multiple pass techniques; imagine being able to wave a camera at a scene and the low-resolution light field is then stitched and computed; this gets rid of the pixel deficit problem of light field and promotes one of its other gains (a quasi 3D like capture). Like I wrote earlier, it gets complicated.)
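To make "multiple pass" concrete, here is a minimal sketch of one way bracketed exposures can be merged for HDR. It assumes linear pixel values between 0 and 1 and known shutter times, and it uses a simple triangle weighting that I picked for illustration; it is not how any particular camera or raw converter does it, and the function name is hypothetical.

```python
def merge_exposures(frames, exposure_times, low=0.05, high=0.95):
    """Merge bracketed frames into one relative-radiance estimate per pixel.

    frames: list of equal-length lists of linear pixel values in [0, 1]
    exposure_times: shutter time in seconds for each frame
    low/high: samples outside this range are treated as buried in noise
              or clipped, and are ignored when a better sample exists
    """
    if not frames or len(frames) != len(exposure_times):
        raise ValueError("need at least one frame and one exposure time per frame")

    merged = []
    for samples in zip(*frames):  # the same pixel position across all frames
        weighted_sum = 0.0
        weight_total = 0.0
        for value, seconds in zip(samples, exposure_times):
            if low <= value <= high:
                # Triangle weight: trust mid-tones most, extremes least.
                weight = 1.0 - abs(2.0 * value - 1.0)
                # value / seconds puts every frame on the same radiance scale.
                weighted_sum += weight * (value / seconds)
                weight_total += weight
        if weight_total > 0.0:
            merged.append(weighted_sum / weight_total)
        else:
            # No usable sample: fall back to the one closest to mid-gray.
            best = min(range(len(samples)), key=lambda i: abs(samples[i] - 0.5))
            merged.append(samples[best] / exposure_times[best])
    return merged


if __name__ == "__main__":
    # Three hypothetical pixels (shadow, midtone, highlight) shot at three shutter times.
    times = [1.0, 0.1, 0.01]
    frames = [
        [0.2, 1.0, 1.0],     # long exposure: highlights clipped
        [0.02, 0.4, 1.0],    # middle exposure
        [0.002, 0.04, 0.6],  # short exposure: shadows buried
    ]
    print(merge_exposures(frames, times))
```

No single frame in the example holds all three pixels within its usable range, but the merged result recovers a value for each, which is the whole point of taking multiple passes.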

Computational photography has other aspects besides multiple pass. Another big field is "correction." We already see bits and pieces of this, too: distortion, vignetting, and chromatic aberration correction, for example. I believe diffraction correction is even possible, as are a number of other types of correction, but the requirements for some corrections (as well as some multiple passes) are faster multiple processors and more working memory.

The biggest field for computational photography, though, is "making up things." We already see this in the Topaz Labs AI products, the Luminar AI products, the Photoshop sky replacement and subject selection functions, and we're going to see more every day. Smartphones have been particularly good at this, as they benefit from Moore's Law continuing to push semiconductor technologies forward, and the close integration between the phone's CPU and image sensors. Apple has added LIDAR to some of their products, which adds another sensor type whose data can be integrated with the image data (for instance, you can measure someone's height while taking a photo of them ;~).

One area where I've reversed course in my thinking is in video. But not for the reasons you might think.

When the D90 appeared with a video capability back in 2008, I was against further development of video in DSLR and sophisticated cameras. A lot of still photography design work stalled while the camera makers spent most of their engineering efforts on getting the video integration right.

Today we're very close to an interesting crossroads, though. Once you implement 4k video (basically double the horizontal and vertical resolution of 1080P, or 3840 x 2160 pixels), you have an 8.3mp video camera. Hmm. Didn't we have 8mp still cameras not too long ago that we thought were pretty good? (And which some folk are still using!) Hmm. Isn't 8mp about right for a traditional magazine page (at 300 dpi)?

If you're willing to make a few compromises, you can record scenes in 4k video and essentially be shooting 8.3mp stills at 60 frames per second. To put that in perspective, the Canon 1D Mark III that was the heart of many sports photographers' kits starting in 2007 (just over a dozen years ago) was 10mp and 10 fps.

But now we have 8K cameras (the Canon R5, with others following from Nikon, Panasonic, and Sony soon). This pushes the still capability to approximately 33mp, which is more than most people need. And note also that we have a trend now of video cameras shooting "raw" files, so we can get around the high levels of compression in most video streams, too.
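The pixel counts are easy to check. A minimal sketch: the 4K and 8K frame sizes are the standard UHD dimensions, while the 8.5 x 11 inch magazine page is my assumption (the text only says "traditional magazine page"), and the function names are hypothetical.

```python
def megapixels(width: int, height: int) -> float:
    """Pixel count of a frame, in millions of pixels."""
    return width * height / 1_000_000


def print_size_in_pixels(width_inches: float, height_inches: float, dpi: int = 300):
    """Pixel dimensions needed to print a page at the given dots per inch."""
    return round(width_inches * dpi), round(height_inches * dpi)


UHD_4K = (3840, 2160)   # double 1080P (1920 x 1080) in each dimension
UHD_8K = (7680, 4320)   # double 4K in each dimension

if __name__ == "__main__":
    print(f"4K frame: {megapixels(*UHD_4K):.1f}mp")
    print(f"8K frame: {megapixels(*UHD_8K):.1f}mp")

    # Assumption: a US letter-sized magazine page, 8.5 x 11 inches.
    page = print_size_in_pixels(8.5, 11)
    print(f"Magazine page at 300 dpi: {page[0]} x {page[1]} = {megapixels(*page):.1f}mp")
```

Doubling both dimensions quadruples the pixel count, which is why each step up in video resolution is such a large jump in still capability.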

What are the compromises involved? Shutter speeds and workflow, mainly. Video shutter speeds tend to be set to the reciprocal of twice the frame rate for "normal looking" motion. For 24 fps, that's 1/48 second, which is too slow a shutter speed for good still capture of anything that's moving. So you have to crank your video shutter speed (to at least 1/100, but more likely as high as 1/500 for fast motion), which makes the video a little jarring to some (but I'll bet we can create automated interframe blur creators ;~). The other problem is that you have to "select" stills from your video stream, which involves using video editors or software products that don't yet really exist for still users. Oh yeah, one other compromise: you'll use lots of storage space doing this. But never bet against processor speed, memory size, or storage space increases in technology, so that last compromise is a bit of a moot point, I think.
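The shutter speed compromise can be put in numbers, too. A minimal sketch, assuming the usual convention that the shutter time is 1 / (2 x frame rate), and a hypothetical subject that crosses a 3840-pixel-wide 4K frame in two seconds. The function names and the subject speed are mine, for illustration only.

```python
from fractions import Fraction


def normal_video_shutter(fps: int) -> Fraction:
    """Shutter time in seconds for "normal looking" motion: 1 / (2 * fps)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return Fraction(1, 2 * fps)


def blur_in_pixels(subject_speed_px_per_s: float, shutter_seconds) -> float:
    """How far a moving subject smears across the frame during one exposure."""
    return subject_speed_px_per_s * float(shutter_seconds)


if __name__ == "__main__":
    # Hypothetical subject: crosses a 3840-pixel-wide frame in 2 seconds.
    speed = 3840 / 2

    video_shutter = normal_video_shutter(24)
    print(f"24 fps video shutter: {video_shutter} s, "
          f"blur of about {blur_in_pixels(speed, video_shutter):.1f} px")

    for still_shutter in (Fraction(1, 100), Fraction(1, 500)):
        print(f"{still_shutter} s shutter: "
              f"blur of about {blur_in_pixels(speed, still_shutter):.1f} px")
```

The smear that makes video motion look smooth is exactly what ruins a still pulled from that video, and shrinking it means shortening the shutter well past what video normally uses.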

Which brings me round full circle.

What are the user problems that still need solving in still cameras? Better, faster, more reliable, more intelligent focus. More precise "moments in time" (e.g. no lag, absolute precision of moment, etc.). Workflow improvement. Perhaps some better low light performance (of all types). Better user control (simpler, faster, more customizable). Not much else.

What are the camera makers iterating? Pixel counts. Larger sensor sizes. Additional features. Cost cutting.

I've seen this game before: the personal computer business. Iterate CPU speed. Increase bit width. Add more features. Cut costs. The net result was that beyond a certain point CPU speeds didn't make for a tangible user difference (same with pixel counts in cameras, I'd argue). Building 32-bit, then 64-bit sizes ditto: these benefited software developers more than users, in my estimation.

On the other hand the camera makers study each other carefully (partly because they're not very far apart from each other physically). So when one does find a technology that leads to a real user benefit—say image stabilization at the image sensor—eventually they all get on board with that. So not only do you have to move first as a camera maker, you have to move fast.

Which brings us back to where we started: you have to watch out for dead-ends. If you iterate first and fast into a dead end (3D TV, or my favorite: 3D Google TV), you use up a lot of capital to not make any real gains. Negative returns on investment are killers in the tech business, as Hitachi, Sharp, Panasonic, and Sony have found out the hard way lately.

The problem is that you need a leader with a bit of a golden gut: someone who can think several years out and see clearly what provides bang for the buck due to a user benefit and what doesn't. The knock on Apple after Steve Jobs was that he was one of those folk, and people thought that with him gone Apple wouldn't have such ability any more. The jury on that one has come in: Apple is still Apple. Jobs was the ultimate decider, but he wasn't the sole forward thinker. Indeed, many of the things we associate with Jobs came to him from others inside and outside the company. So the question was whether the current management is as capable as past management at separating the wheat from the chaff. The success of the Apple Watch and other products says that management at Apple is doing just fine.

Semil Shah wrote on TechCrunch in late December 2013: "It's early innings for digital photography." Indeed it was. Those that care to look can see the glimmer of plenty of new ideas that become research projects that become patents that become development projects that become products. I'm not going to identify them here because that's for paying customers (I sometimes consult with tech companies), but I see an acceleration of things that will be pivotal to future cameras and photography.

I happen to remember the first spell checker on personal computers, and more importantly, when the first spell checker was integrated so that it checked spelling as you wrote (easy for me to say that: I was the one who provided the hook to the leading standalone spell checker at the time to be able to react when the leading word processor, WordStar, finished typing "a word"; bingo, real time spellchecking). That was a technology that opened up new frontiers in word processing, as it also caused word processor creators to think slightly differently about their code.

We're going to see similar things happen soon in cameras, too. What if you told your camera where a photograph should go before you took it?

I get amused when people (including camera company executives) tell me something like "Android is the future of cameras." No, it isn't. It might be an enabler of something, but Android itself isn't a solution to anything other than developing a product faster (because someone else did most of the low level software coding that created a kernel and API around ARM CPUs and gave it to you for free).

So in the coming year as new camera technologies and features get announced, discussed, and promoted, do yourself a favor. Ask a single question: what's the tangible user benefit? If it isn't tangible or there isn't a benefit to the user, it won't fly. If it is tangible and provides a real benefit, you then have to process further: what stage is the technology at (idea, research, patent, development, early product deployment, maturing product)? If it isn't in at least development, then the technology isn't going to impact your current buying decisions much, if at all.

Let me use an example from the past: the Nikon 1. Nikon entered the mirrorless market late. But they did so with a technology: phase detect autofocus on the sensor that worked. Yes, there was a tangible benefit to the user: the Nikon 1's were the only mirrorless cameras at the time that could follow motion well, and they were as fast as DSLRs in most focus actions. That's tangible when the rest of the bunch can't follow motion and some are even sluggish to focus at all.

Better still, the Nikon 1 was not just in development, but shipping! What we're ultimately looking for are technologies that you would buy today (all else equal).

Ah, the all else equal curse. Two things weren't equal at all: the Nikon 1 came with what customers would characterize as a thing-of-the-past sensor (small physical size, 10mp) and a higher-than-expected price. 10mp and US$900 was enough to cripple how well the Nikon 1 would compete in the actual marketplace, which was mostly 12-16mp and lower priced with more features at the time.

Still, looking back, you can see that the Nikon 1 pioneered the exact technology that Fujifilm, Nikon, and Sony are all using for autofocus now (Canon invented their own variation, Olympus went a different route, and Panasonic has yet to do phase detect AF).

So there's one final thing to think about as you look forward at new technologies: how well does the company that has that interesting new technology execute? Make mistakes in execution and the technology benefit can be washed away as quickly as you gained it. The very best companies execute first, faster, and better. Not first only, or faster only, but first, faster, and better. Generally you can even ignore the first if you can do it faster and/or better.

What you don't want is to invest in companies that are last, slower, and worse. Amazingly, we have examples of that littered throughout the history of high technology.