It seems that many people keep getting confused by the notion of exposure, and for that reason we keep getting “new” articles trying to address it (including this one). For the most part, people make exposure more difficult than it is. We film old-timers tend to understand it better.

Three things determine “exposure”:

- The brightness of the scene being photographed

- The aperture used

- The shutter speed used

These days I use the shorthand EXPOSURE = LIGHT filtered by APERTURE filtered by SHUTTER SPEED. In other words, exposure is the set of photons that actually make it to your image sensor. Note that this is not the same “exposure” you’ve probably been told about.

Together, these three things determine how much light falls on the sensor (or film). (Technically, a filter in front of the lens also affects exposure, because every glass/air surface, even a coated one, reduces the light passing through toward the sensor. Some filters, such as neutral density filters, are designed specifically to reduce the light that reaches the lens, and thus the sensor.)
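The three-factor relationship, plus the filter caveat, can be put into numbers. This is a minimal sketch, assuming the standard photometric proportionality in which light per unit sensor area scales with scene luminance × shutter time ÷ f-number², with a filter treated as a simple transmission factor. The function names are hypothetical, and marked f-numbers are used rather than T-stops. Note that ISO appears nowhere in it.

```python
import math

def relative_exposure(luminance: float, f_number: float, shutter_s: float,
                      filter_transmission: float = 1.0) -> float:
    """Light per unit sensor area, in arbitrary units (constants omitted).

    Assumes H is proportional to L * T * t / N^2, where T is the fraction
    of light a filter passes (1.0 means no filter).
    """
    return luminance * filter_transmission * shutter_s / f_number ** 2

def stops_between(h_a: float, h_b: float) -> float:
    """Difference between two exposures in stops (log base 2)."""
    return math.log2(h_a / h_b)

scene = 100.0  # arbitrary scene brightness units
a = relative_exposure(scene, 2.8, 1 / 250)
b = relative_exposure(scene, 4.0, 1 / 125)
nd = relative_exposure(scene, 2.8, 1 / 250, filter_transmission=1 / 8)  # 3-stop ND

print(f"f/4 at 1/125 vs f/2.8 at 1/250: {stops_between(b, a):+.2f} stops")
print(f"adding a 3-stop ND filter: {stops_between(nd, a):+.2f} stops")
```

The tiny mismatch in the first comparison comes from f/2.8 being a rounded marking (the exact one-stop value is √8 ≈ 2.83).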

Please re-read that list until you’ve committed it to memory. “How much light falls on the sensor” is the key to understanding much of what happens in the image quality realm. Three things determine exposure. I’ll refer to them several more times in this article, so make sure you know what they are.

Side note: Aperture is a bit of a tricky wicket. Here I’m using f-numbers, which are theoretical, instead of T-stops, which are actual. Also, for the same angle of view, the physical size of an aperture scales with the capture size (e.g. f/2 on m4/3 is physically smaller than f/2 on FX). This has ramifications down the line, but in terms of “exposure,” f/2 is f/2: we get a normalized amount of light to the sensor per square millimeter.
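To make the “f/2 is f/2” point concrete: the f-number is focal length divided by entrance-pupil diameter, so a smaller format using a shorter lens for the same angle of view has a physically smaller opening, yet the light per square millimeter (proportional to 1/N²) is identical. A sketch, assuming a 25mm lens on m4/3 and a 50mm lens on FX as roughly matching angles of view; the function names are illustrative.

```python
def pupil_diameter_mm(focal_length_mm: float, f_number: float) -> float:
    # The f-number is focal length divided by entrance-pupil diameter.
    return focal_length_mm / f_number

def relative_light_per_mm2(f_number: float) -> float:
    # Light per unit sensor area scales with 1/N^2 (same scene, same shutter speed).
    return 1.0 / f_number ** 2

for fmt, focal in (("m4/3", 25.0), ("FX", 50.0)):
    d = pupil_diameter_mm(focal, 2.0)
    print(f"{fmt}: {focal:.0f}mm at f/2 -> {d:.1f}mm opening, "
          f"light per mm^2 {relative_light_per_mm2(2.0):.2f} (relative)")
```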

The resulting exposure (again, how much light gets to the sensor) will have some level of noise in it: the randomness of photons. Once we record the data, we may add noise while reading the analog data and converting it to digital (read noise), plus component noise (fixed pattern noise, heat noise, amp noise, etc.). The accuracy of the analog-to-digital converter (ADC), and how its output is converted into integers packed into some number of bits, can also produce rounding errors. I mention rounding errors for a reason: they, too, are ultimately a form of “noise.”

Generally, one of the noise types I just mentioned is stronger than the others in your data and becomes the primary contributor to noise in the final result. Sometimes quantum shot noise (random photons) dominates (not much light reached the sensor; a low exposure), sometimes read noise dominates (enough light was received but it wasn’t perfectly converted), and in a few cases component noise dominates (for example, in very hot climates when the sensor itself is running hot, and especially for long exposures). A really non-linear ADC, or packing the ADC’s output values into too few bits, can also become the dominant source of noise, but I haven’t really seen that in recent years with digital gear. Finally, note that many raw converters have lots of “noise producers” in them, due to the precision of the calculations being performed and the manner in which they’re done (everything from the color space model to the demosaicing itself). I’m going to ignore these last things for this article, as they deserve an article of their own.
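Independent noise sources add in quadrature (their variances sum), which is why the largest one tends to swamp the rest. A minimal sketch in electrons, assuming shot noise equals the square root of the collected signal and treating read noise and dark/thermal noise as fixed standard deviations. The three cases use made-up numbers, not measurements from any camera.

```python
import math

def noise_budget(signal_e: float, read_noise_e: float, dark_noise_e: float = 0.0):
    """Return (total noise in electrons, name of the largest contributor)."""
    shot = math.sqrt(signal_e)  # Poisson statistics: std dev = sqrt(mean)
    total = math.sqrt(shot ** 2 + read_noise_e ** 2 + dark_noise_e ** 2)
    parts = {"shot": shot, "read": read_noise_e, "dark": dark_noise_e}
    return total, max(parts, key=parts.get)

cases = [
    ("low-noise readout", 50, 3.0, 0.0),
    ("noisier readout", 50, 12.0, 0.0),
    ("hot sensor, long exposure", 50, 3.0, 15.0),
]
for label, signal, read, dark in cases:
    total, dominant = noise_budget(signal, read, dark)
    print(f"{label}: total {total:.1f} e-, SNR {signal / total:.1f}, dominant: {dominant}")
```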

Nikon has had a noise source calculator for many years on their Web site. It’s on the microscope Web site, which is why you’ve probably never seen it. But if you’ve got Java enabled in your browser, it’s actually instructive to play with different noise contributors and see what happens.

The notion that small-photosite cameras (e.g. D850, Z8) produce more noise than large-photosite cameras (e.g. Df or D4) is mostly misguided. If the exposure hitting the same area is the same, as it would be when photographing the same scene with a Df and a D850 at the same aperture and shutter speed, the size of the photosite generally isn’t a factor in the amount of noise in the final image. (It can be a small factor because part of each photosite’s area isn’t used for light collection; but in current Sony/Nikon sensors built on 65nm process fabs with 100% microlenses or back-side illumination, this isn’t really an issue, as essentially all the light reaching the sensor is collected.) What more photosites in the same sensor size generally give you is not “more noise” but rather “the same overall noise with better spatial resolution.”

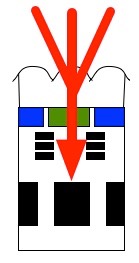

In the illustration above, light rays coming in at different angles (red) are focused by microlenses (top) into a single photosite. Virtually all of the light is collected, even though the area that actually responds to light is smaller than the photosite itself.

The black parts in this illustration are data lines, power lines, data collectors, and other “active” parts of the sensor. The blue and green blocks here represent Bayer filtration.
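One way to see why photosite count mostly doesn’t matter: compare one large photosite with four small ones covering the same patch, summed together (roughly what happens when the higher-resolution image is viewed at the same output size). A sketch, assuming a 100% effective fill factor, shot noise of sqrt(signal), and the same per-photosite read noise on both sensors. That last assumption is a simplification, and it is where the small remaining difference comes from.

```python
import math

def summed_snr(signal_e: float, read_noise_e: float, n_photosites: int = 1) -> float:
    # Summing n photosites: the signals add, shot-noise variance adds,
    # and read-noise variance is added once per photosite read.
    shot_var = signal_e
    read_var = n_photosites * read_noise_e ** 2
    return signal_e / math.sqrt(shot_var + read_var)

electrons_on_patch = 4000  # hypothetical light collected over the patch
read_noise = 2.0           # hypothetical per-photosite read noise, electrons

big = summed_snr(electrons_on_patch, read_noise, n_photosites=1)
small = summed_snr(electrons_on_patch, read_noise, n_photosites=4)
print(f"one large photosite:          SNR {big:.2f}")
print(f"four small photosites summed: SNR {small:.2f}")
```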

However, if we use a smaller sensor (e.g. DX instead of FX), then the same exposure does result in less total light reaching the sensor, because there’s less area collecting it. The same amount of light reaches the same area within the two sensors (e.g. the FX sensor’s DX crop area), but the total area is larger for FX than for DX. Thus, if you’re photographing without a crop enabled, the FX sensor collects more light than the DX one. A 24mp DX camera photographing a fixed scene at a given aperture and shutter speed therefore won’t collect as much light as an FX camera photographing the same scene at the same aperture and shutter speed. If you take photos with a DX crop enabled on an FX camera, the resulting image area receives the same amount of light as a DX camera would.
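The FX/DX difference is just area multiplied by the same light per square millimeter. A sketch, assuming nominal sensor dimensions (36×24mm for FX, 23.5×15.6mm for Nikon DX; exact values vary slightly by model) and a shot-noise-limited result, where SNR scales with the square root of the light collected.

```python
import math

SENSORS_MM = {"FX": (36.0, 24.0), "DX": (23.5, 15.6)}  # nominal width x height

def area_mm2(name: str) -> float:
    width, height = SENSORS_MM[name]
    return width * height

light_per_mm2 = 1.0  # identical: same scene, aperture, and shutter speed
total_fx = area_mm2("FX") * light_per_mm2
total_dx = area_mm2("DX") * light_per_mm2
ratio = total_fx / total_dx

print(f"FX collects {ratio:.2f}x the light of DX ({math.log2(ratio):.2f} stops)")
print(f"shot-noise-limited SNR advantage for FX: {math.sqrt(ratio):.2f}x")
```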

So what happens when we have a scene without much light, or have to use a small aperture or a fast shutter speed? You’ll probably say, “if I don’t have enough light I just bump up the ISO to get a better exposure.” No. Please go back and re-read the three aspects of exposure; I told you not to proceed until you understood them.

ISO is not part of the exposure. Rather, it’s basically a way of taking a dim exposure and amplifying the data in it so that it’s “brighter.” Instead of using a slider in Lightroom or another program to do that, you’re telling the camera to do the same thing to the original data coming off the sensor. I won’t get into why, in most situations, we’d rather have the camera do that than use post-processing software, but changing ISO does not change your exposure. The same amount of light hits the sensor no matter what ISO you set. The sensor doesn’t get “more sensitive.”
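If ISO is treated as gain applied after the light has been captured, it scales signal and noise alike, so the signal-to-noise ratio set by the exposure doesn’t move. A sketch, assuming an ideal noiseless amplifier (real amplifiers add a little noise of their own); the gain values simply stand in for ISO steps.

```python
import math

def capture(signal_e: float, read_noise_e: float):
    # The exposure fixes the signal; shot and read noise come along with it.
    noise = math.sqrt(signal_e + read_noise_e ** 2)
    return signal_e, noise

def apply_gain(signal: float, noise: float, gain: float):
    # An ideal amplifier multiplies everything it is given, noise included.
    return signal * gain, noise * gain

signal, noise = capture(200.0, 3.0)  # a dim exposure, in electrons
for iso, gain in ((100, 1), (400, 4), (1600, 16)):
    s, n = apply_gain(signal, noise, gain)
    print(f"ISO {iso}: brightness x{gain}, SNR {s / n:.2f}")
```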

Another aside, to be perfectly correct: a few sensors do perform tweaks that change how they react to light at different levels, but most cameras today use what we’d call “ISOless” sensors. Most Canon DSLRs, plus the Nikon D2h/D2hs, D3/D3s, D4/D4s, and Df, do something my friend Iliah Borg calls ISOfull: they get a slight improvement in noise when ISO is bumped because a pre-amplification stage ahead of the ADC makes the final data a bit better. Most recent cameras also use dual-gain designs in the image sensor. However, dual gain doesn’t make the sensor more responsive to light; it just changes how the recorded data is amplified, in a way that makes that data a bit better.
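A common way to model why gain ahead of the ADC helps a little is to split read noise into a part added before the amplifier and a part added after it. Noise added after the gain shrinks by the gain factor when referred back to sensor electrons. This is a sketch of that two-part model only; the noise figures are hypothetical, and the light reaching the sensor is identical at every gain setting.

```python
import math

def input_referred_read_noise(upstream_e: float, downstream_e: float, gain: float) -> float:
    # Downstream noise (e.g. from the ADC) is divided by the gain when
    # expressed in sensor electrons; upstream noise is unaffected.
    return math.sqrt(upstream_e ** 2 + (downstream_e / gain) ** 2)

UPSTREAM_E = 1.5    # hypothetical noise before the gain stage, electrons
DOWNSTREAM_E = 6.0  # hypothetical noise after the gain stage, electrons

for gain in (1, 2, 4, 8, 16):
    rn = input_referred_read_noise(UPSTREAM_E, DOWNSTREAM_E, gain)
    print(f"gain x{gain:>2}: {rn:.2f} e- read noise")
```

When the downstream term is already tiny, the curve is nearly flat, which is the “ISOless” case: raising the gain buys almost nothing.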

Note that exposure compensation does change the exposure ;~). That’s because it alters either the aperture or the shutter speed value, and that will let more or less light get to the sensor.

Eventually, we have to take our exposure (the light that hit the sensor) and turn it into something visually useful. Digital cameras collect data linearly, but presenting that data requires adjustments for viewing conditions (gamma), so we have to modify the data in some way to create the output we typically call an image. We may have to “brighten” the mid-tones (which is one reason why having ISO in the camera is useful: if we know we need to brighten everything, we can have the camera start the process).
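The sRGB transfer function (IEC 61966-2-1) is one widely used encoding for display, and it shows why linear data has to be remapped. Raw converters apply their own tone curves on top of something like this, so treat the sketch as an illustration of the gamma step rather than what any particular camera or converter does.

```python
def srgb_encode(linear: float) -> float:
    """Map a linear value in [0, 1] to an sRGB-encoded value in [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear  # short linear segment near black
    return 1.055 * linear ** (1 / 2.4) - 0.055

for linear in (0.01, 0.18, 0.5, 1.0):
    print(f"linear {linear:.2f} -> encoded {srgb_encode(linear):.3f}")
```

An 18% linear mid-gray lands at roughly 46% of the encoded range, which is the “brightening” of the mid-tones described above.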

Finally, let’s talk underexposure and overexposure.

Overexposure is easy: digital cameras (currently) have a limit to how much exposure they can record. When a photosite “fills up” and hits its saturation limit, it can’t record any more light. In digital, overexposure is truncation of highlight information. Worse still, we collect light in separate spectral components (red, green, blue), so it’s possible to truncate the highlight information of just one color (or two, or all three).
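Clipping just one channel also changes the color, because the ratios between channels no longer match the scene. A sketch, assuming a normalized saturation level of 1.0 per channel and a hypothetical highlight whose red component exceeds it.

```python
SATURATION = 1.0  # normalized full-well level (assumption)

def record(rgb_light):
    # Each channel saturates independently of the others.
    return tuple(min(c, SATURATION) for c in rgb_light)

def channel_ratios(rgb):
    r, g, b = rgb
    return r / g, b / g

highlight = (1.6, 0.9, 0.5)  # light reaching the sensor; only red is over the limit
recorded = record(highlight)

for label, rgb in (("scene", highlight), ("recorded", recorded)):
    rg, bg = channel_ratios(rgb)
    print(f"{label:>8}: R/G {rg:.2f}, B/G {bg:.2f}")
```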

Underexposure is a much more elaborate topic. Technically, at the extreme we might not be able to distinguish between two extremely dim amounts of light hitting the sensor. If we received only two or three photons, the sensor had 50% efficiency, and the underlying read and fixed pattern noise was four or five electrons, we couldn’t distinguish between two and three photons coming in, because the data is beneath the noise floor. So, yes, you can underexpose and essentially truncate shadow information. With every new camera that comes in, one of the things I try to test is just where I lose any useful ability to distinguish between exposures on the underexposure side. Coupled with my knowledge of where the sensor saturates, I then know the two extremes between which I can record useful data.
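The two-versus-three-photon example and the “two extremes” idea can be put into numbers. A sketch, assuming the 50% efficiency from the example, 4.5 electrons of read noise (between the “four or five” above), and a hypothetical 50,000-electron saturation level. Dynamic range here is the common engineering definition, log2(saturation ÷ read noise); a practical “useful data” threshold will sit somewhat above that floor.

```python
import math

QE = 0.5              # fraction of photons converted to electrons
READ_NOISE_E = 4.5    # electrons
FULL_WELL_E = 50_000  # hypothetical saturation level, electrons

def electrons(photons: float) -> float:
    return photons * QE

difference = electrons(3) - electrons(2)
print(f"2 vs 3 photons differ by {difference:.1f} e- against {READ_NOISE_E} e- of read noise")

dr_stops = math.log2(FULL_WELL_E / READ_NOISE_E)
print(f"engineering dynamic range: {dr_stops:.1f} stops")
```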

Most people, however, call their images underexposed when what they really have are images with a low exposure. They’re complaining about the brightness of the viewable image when it’s “processed normally”: the image looks darker than they wish it to be. I’d also point out that the deep shadow data might have fallen below what we can usefully employ in our output. Some are savvy enough to understand that a dim exposure (but one with a full set of useful, non-truncated data, i.e. potentially optimal data) likely has more noise in it and thus should be avoided if possible. Just don’t fall into the trap of thinking that “bumping the ISO” fixes that problem. It does not. The things that make a dim exposure better are (1) changing the scene brightness (adding light), (2) changing the aperture (opening up to a wider stop), or (3) changing the shutter speed (using a slower one).

Which brings me to why I complained so much about Nikon’s DX lenses, and why I’m not a great fan of Sony’s initial decision to create mostly f/4 zooms for its mirrorless systems: one of the ways I can get a better exposure is by opening up the aperture. But when the lens stops at f/3.5, f/4, or f/5.6, I often can’t do that, can I? That leaves me only two ways to improve the exposure: adding light or using a slower shutter speed.
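Here is what that costs in shutter speed. A sketch, assuming the scene brightness stays fixed and that a hypothetical 1/60s works at f/2.8. Matching the exposure at a smaller maximum aperture means scaling the shutter time by (slow f-number ÷ fast f-number)².

```python
def shutter_to_match(shutter_s: float, fast_f: float, slow_f: float) -> float:
    """Shutter time at slow_f giving the same exposure as shutter_s at fast_f."""
    return shutter_s * (slow_f / fast_f) ** 2

base = 1 / 60  # illustrative shutter time that works at f/2.8
for slow in (3.5, 4.0, 5.6):
    t = shutter_to_match(base, 2.8, slow)
    print(f"f/{slow}: about 1/{1 / t:.0f}s instead of 1/60s")
```

At f/5.6 that’s two stops, or four times the shutter time, which is often the difference between a sharp handheld shot and a blurred one.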